Latest

23 Feb

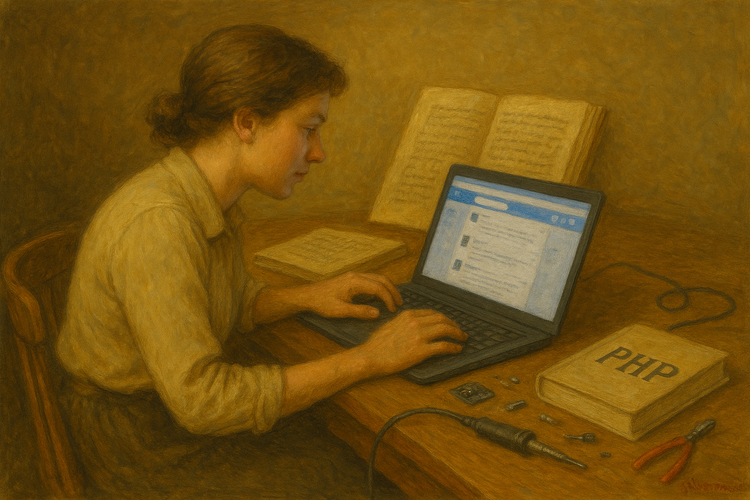

AI Inverts the Disciplinary Hierarchy

Maybe we should be more cautious about defunding fields just because we can't immediately see their application.

3 min read

17 Feb

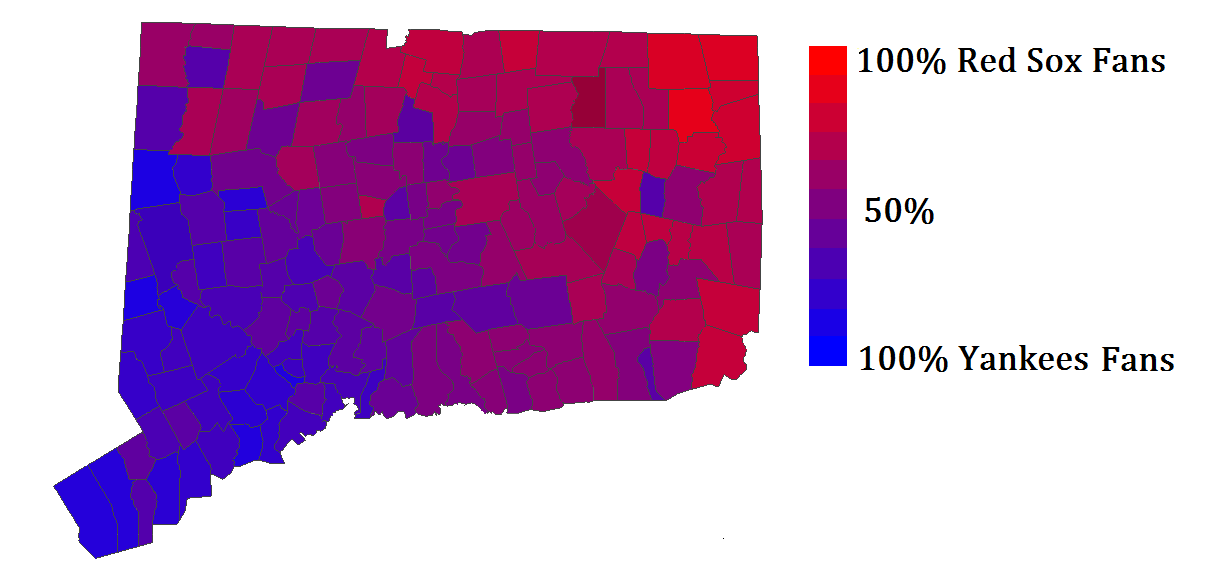

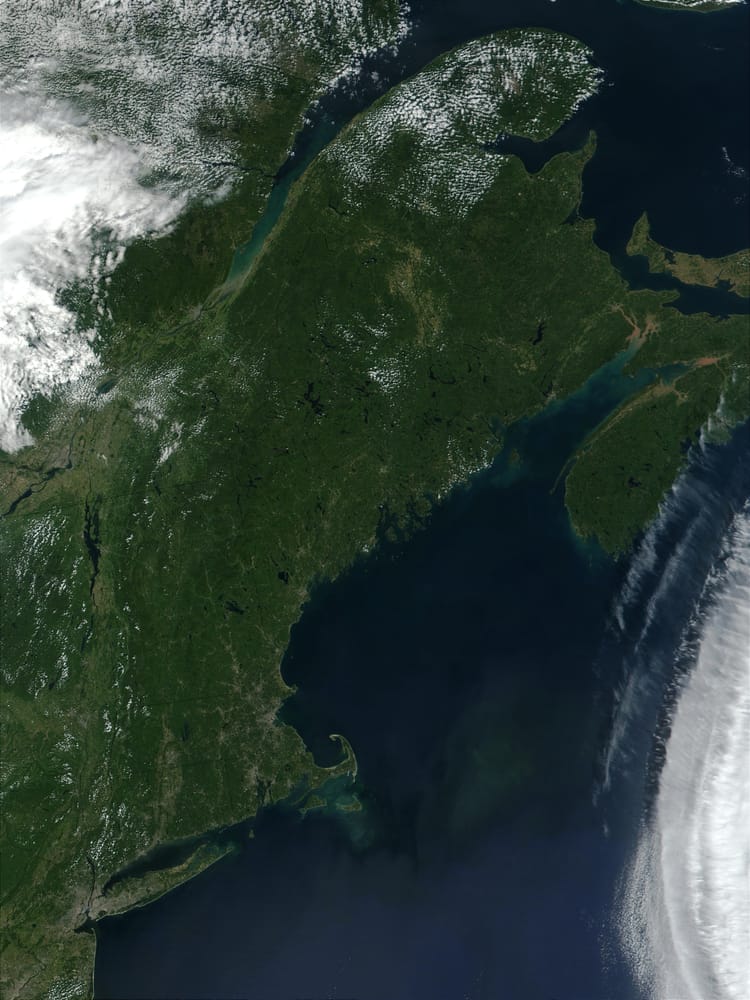

Contingency and the Cartographic Making of New England (Part I)

New England's borders aren't natural. They're historical accidents.

6 min read

30 Jan

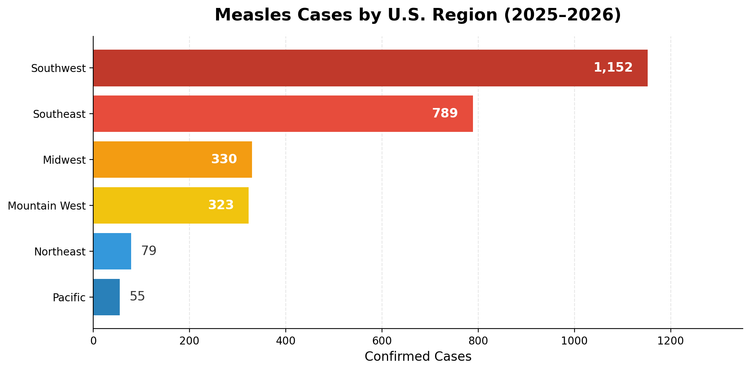

The Measles Crisis Is Regional—Let's Keep It That Way

Measles is a national issue. It's not a national phenomenon.

3 min read

29 Jan

Trust and AI: A Conversation with Claude

How is trusting AI different from trusting people?

10 min read

21 Jan

Inefficient by Design: How Medieval Values Shaped Today's University

A nine-century case for being slow

11 min read

22 Dec

AI Lessons from 1999

Nothing will work, but everything might.

6 min read

15 Dec

Netflix and HBO

Hollywood's choice isn't consumer choice

2 min read

14 Dec

The Forest and the Tree: What the Latest Accommodation Debate Gets Wrong

A lot has been written this week about the increase in student accommodations on college campuses. It started with a…

3 min read

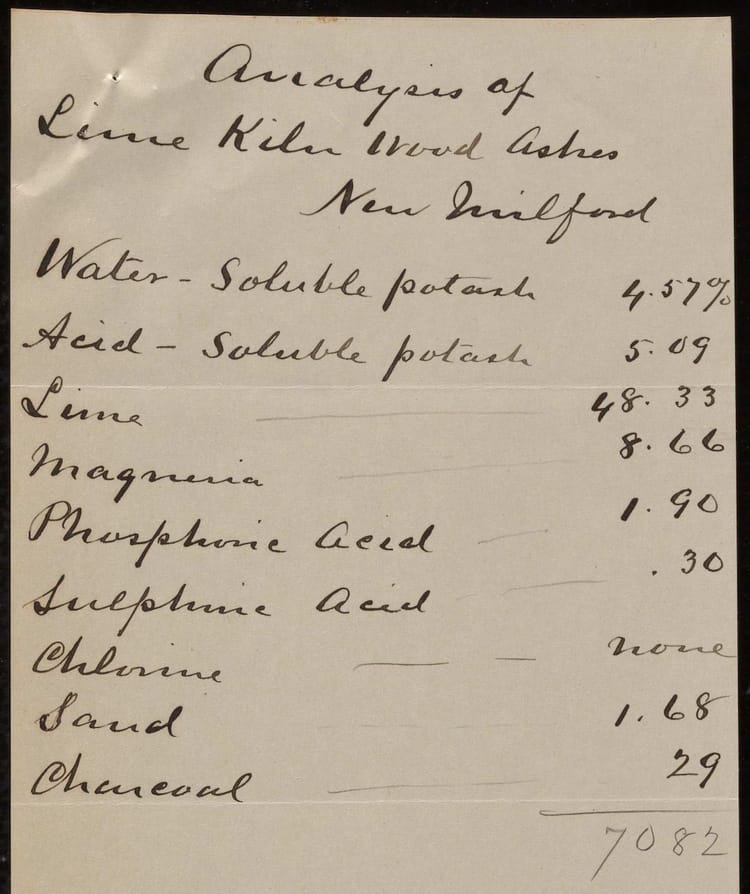

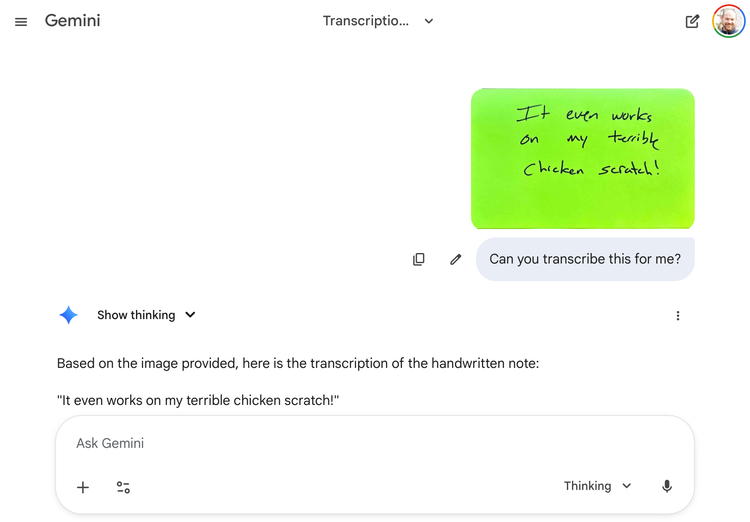

08 Dec

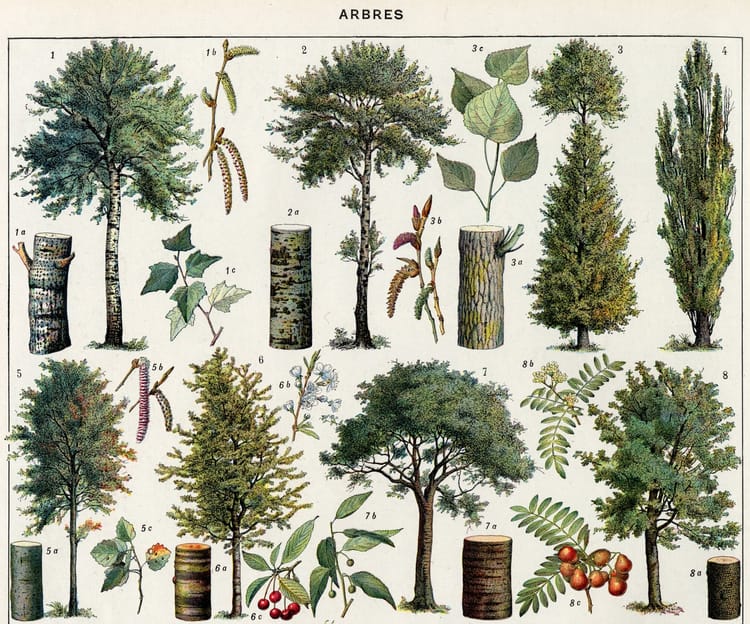

Seeing Old Science

Gemini’s ability to read handwritten archival documents has importance beyond the humanities

6 min read

06 Dec

Handwriting Recognition Roundup

Like it or not, a new world for archives and historical scholarship is here

4 min read