AI Coding Collaboration for Digital Humanists (Part 2): From Prototype to Production

Author's note: This post is the second in a series on AI Coding Collaboration for Digital Humanists (and other competent imposters). The series chronicles the development of AcadiMeet, a scheduling application designed for the particular needs of university faculty and staff members, built from concept to production over five sessions with an AI partner. It extracts from my chats the strategic methods, communication patterns, and architectural principles necessary for domain specialists—who know what to build but may lack current coding fluency—to successfully launch a software development project with the help of generative AI.

In part one of this series, I argued that your first session coding with the help of an AI shouldn't be about code at all. It should be about vision and conceptual clarity. Allowing adequate time to establish your project's design philosophy will give you and the AI the shared intellectual groundwork necessary to build an application that works for its users. Only then should you move on to implementation, which will involve two phases: prototyping and execution.

Phase 1: Rapid Prototyping

After clarifying the project's vision, you may yet again be tempted to jump into code. But as a "competent imposter," a domain expert with a keen knowledge of your application's audience, your strength lies not in your development chops, but in your sense of the optimal user experience (UX).

Claude excelled in a rapid prototyping process, allowing me to see, test, and refine the end-to-end AcadiMeet user experience six times in little more than three hours. Because we had already established a shared vision in our first session, rather than struggling to explain a complex workflow in abstract terms, I simply asked Claude to generate an interface. In each successive iteration, I moved Claude closer to the UX I had in my head, pointing out design flaws and adding new features along the way.

Claude didn't always immediately give me what I wanted. For example, I had to push back quite strenuously when Claude suggested eliminating the profile creation workflow that encourages users to pre-populate their semester schedules with class meeting times and protected research blocks. Claude correctly ascertained that this workflow would add considerable complexity to the application and hours to the timeline. But as the domain expert, I knew that it was a differentiator, the thing that would set AcadiMeet apart from usable but insufficient tools like Doodle. In this way, the rapid prototyping phase affirmed my role. The AI may be an implementation expert, but you know what your product and its users need. That position of authority is an essential perspective to maintain throughout the AI coding collaboration process, lest you be cowed by the apparent omniscience of the machine.

Phase 2: Executing with Precision and Rhythm

Only after the UX was finalized did we discuss the tech stack. My earlier disclosure about my limited, non-current coding background and plan to work solo was crucial in getting this right. The AI used this information to recommend a modern but manageable stack (React, Node.js/Express, Prisma, SQLite), which it helped me install in my local development environment. Claude also assisted me with setting up a deployment workflow in GitHub and Railway. This process was not without missteps, and I repeatedly had to ask Claude for "step-by-step" instructions.

With the technology finally in place, our focus shifted to precise execution. Here, the challenge for me was not to write the code (Claude did at least 90% of that work) but to learn how to communicate with Claude in a way that would generate code that would actually work. Through trial and error, three strategies emerged for generating clean working code.

Work in complete files, not code snippets. My initial inclination in building out the prototype was to ask Claude for pieces of code that would do this or that. This proved disastrous, leading to frustrating exchanges because I couldn't spot the ripple effects of the code I was adding or changing. It quickly became clear that I should always share complete files and request complete files back. When I shared an entire file (e.g., auth.js), the AI could spot global patterns, such as a variable mismatch (passwordHash vs. hashedPassword) repeated in multiple places. If I had shared only a snippet, I might have fixed one instance, immediately run into another, and spent unnecessary time debugging internal synchronization issues. Sharing a whole file unlocks the AI's capacity for instant holistic analysis, turning a series of fragmented edits into a single, cohesive, synchronized update. (As an aside, working in code snippets actually seems to be Claude's preference, as it had been mine at the start. Each time I open a new coding session with Claude, I now have to retrain it to receive and send back full files. I suspect this may be a design choice intended to save on token use and, perhaps, electricity.)
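To make the whole-file point concrete, here is a minimal sketch in TypeScript of the kind of check that is only possible when the entire file is in view. Everything in it is an assumption for illustration: the sample source is a hypothetical stand-in for an auth.js-style file (not AcadiMeet's actual code), and `findIdentifierLines` is a made-up helper name. It simply lists every line on which each of the two competing variable names appears.

```typescript
// Hypothetical stand-in for an auth.js-style file; not the real AcadiMeet code.
const sampleSource = [
  "async function register(email, password) {",
  "  const passwordHash = await hash(password);",
  "  return db.user.create({ data: { email, hashedPassword } });",
  "}",
  "async function login(user, password) {",
  "  return compare(password, user.passwordHash);",
  "}",
].join("\n");

// Return the 1-based line numbers on which each name appears as a whole word.
// Assumes the names are plain identifiers (no regex special characters).
function findIdentifierLines(source: string, names: string[]): Record<string, number[]> {
  const result: Record<string, number[]> = {};
  const lines = source.split("\n");
  for (const name of names) {
    const pattern = new RegExp("\\b" + name + "\\b");
    const hits: number[] = [];
    lines.forEach((line, index) => {
      if (pattern.test(line)) hits.push(index + 1);
    });
    result[name] = hits;
  }
  return result;
}

const report = findIdentifierLines(sampleSource, ["passwordHash", "hashedPassword"]);
for (const name of Object.keys(report)) {
  console.log(name + ": lines " + report[name].join(", "));
}
// passwordHash: lines 2, 6
// hashedPassword: lines 3
```

With only the `register` function pasted into the chat, the mismatch on line 6 of this hypothetical file would stay invisible until the next error surfaced.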

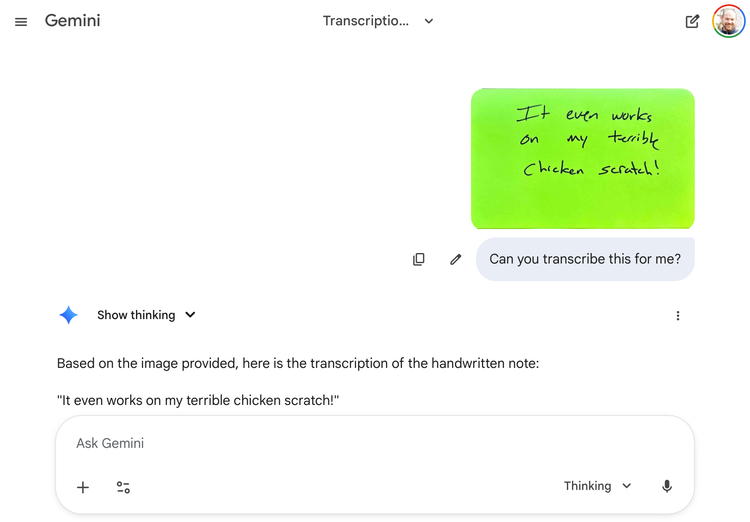

Use the "eyes" of the AI. Because the user interfaces of today's AI chat bots are predominantly text-focused (and probably also because we humanists are so comfortable working entirely in text), it is easy to forget that AIs can "see." Claude loves a screenshot. This is a killer feature for web development. You don't have to describe everything you want perfectly in words. You can upload a screenshot of your prototype and point to a particular feature or visual quirk. You can upload screenshots of the visual interfaces of the tools you're struggling with and ask for help. I often gave Claude screenshots of SendGrid's key setup interface or the Railway deployment dashboard or my DNS settings to ask for help fixing something. Lengthy verbal descriptions of complex visuals can obscure more than they illuminate. The answers you get back can throw you further off track. Better to share screenshots so that the AI can give you precise, specific, click-by-click instructions.

Establish a rhythm. It seems strange to talk about dancing with a chat bot, but that's how it sometimes felt when I was troubleshooting problems with Claude. As problems arose, and they often did, we developed a reliable, systematic rhythm for troubleshooting. First, Claude would suggest an action. I would try it. It would fail. I would share the complete error output verbatim (e.g., the browser console error or server log). Claude would suggest a fix. I would apply the fix immediately and report the results. We would repeat this dance until the bug was fixed.

The dance metaphor is apt in two ways. First, precision is crucial: you cannot paraphrase an error message or output; you must report it completely and exactly. Second, timing is crucial: you must respond to your dance partner (the AI) as soon as it makes a move. Instant feedback makes the most of the AI's short-term context window and keeps you both from losing the thread.
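One way to make verbatim reporting a habit is to have the code assemble the report for you. The following is a minimal TypeScript sketch, not something taken from the AcadiMeet codebase: `formatErrorReport` is a hypothetical helper name, and the context string is an invented example. It gathers an error's name, message, and full stack trace into a single block of text that can be pasted into the chat unedited.

```typescript
// Build a paste-ready report that preserves the error's name, message, and stack as-is.
// formatErrorReport is a hypothetical helper, named here only for illustration.
function formatErrorReport(error: unknown, context: string): string {
  const header = "Context: " + context;
  if (error instanceof Error) {
    const stack = error.stack ? error.stack : "(no stack trace available)";
    return [
      header,
      "Name: " + error.name,
      "Message: " + error.message,
      "Stack:",
      stack,
    ].join("\n");
  }
  // Non-Error values can be thrown too; report them unchanged rather than guessing.
  return [header, "Thrown value: " + String(error)].join("\n");
}

try {
  // Deliberately malformed input so there is an error to report.
  JSON.parse("{ not valid json");
} catch (error) {
  console.log(formatErrorReport(error, "parsing a saved schedule"));
}
```

The exact stack text will differ by runtime, which is the point: the report carries whatever the runtime actually produced, not a summary of it.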

Key Lessons for the Competent Imposter

These are among the implementation patterns that I stumbled upon in building AcadiMeet. They ensured that most of my time was spent making product decisions rather than struggling with syntax or integration errors. I now make sure to train Claude on each one of these patterns at the start of a new session. Which leads me to the subject I'll tackle in my next post: mastering continuity across multiple AI development sessions.
