AI Coding Collaboration for Digital Humanists (Part 4): Performance, Polish, and Problem Solving

Author's note: This post is the final installment in a series on AI Coding Collaboration for Digital Humanists (and other competent imposters). The series chronicles the development of AcadiMeet, a scheduling application designed for the particular needs of university faculty and staff members, built from concept to production over five sessions with an AI partner. It extracts from my chats the strategic methods, communication patterns, and architectural principles necessary for domain specialists—who know what to build but may lack current coding fluency—to successfully launch a software development project with the help of generative AI.

In the previous parts of this series, we moved from vision to prototype and described methods for efficient implementation and maintaining continuity. By the end of my fourth Claude chat, AcadiMeet was working.

But "working" is not "finished." Transforming a functional MVP into a reliable, user-friendly service means giving the application the security, polish, and stability it needs to earn user trust. It also means fixing things on the fly when they break under real-world use.

Security, Performance, and Polish

Before launching AcadiMeet, I asked Claude to conduct a thorough security and performance review of the codebase. For security hardening, Claude suggested several key measures: rate limiting, Helmet.js security headers, secure management of environment variables, and improved error handling that doesn't leak internal details in production. Claude provided updated files, which I tested systematically. I verified rate limiting by deliberately failing logins, and I checked the security headers in Chrome DevTools.

For performance optimization, we added strategic database indexes to the Prisma schema to speed up queries on large datasets. We also optimized the frontend build through code splitting, compression, and stripping console logs from production builds. We considered but rejected some additional enhancements, including OAuth calendar integration, as too complex for the MVP.

Throughout this process, we maintained the pattern of testing immediately after each change, reporting results, and debugging systematically by checking logs. The security and performance work took about two hours in total, structured as discrete phases with clear checklists and verification steps at each stage.
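To make two of those security measures concrete, here is a minimal, dependency-free TypeScript sketch. It is not the code Claude produced for AcadiMeet. In an Express app you would more likely use a package such as express-rate-limit alongside Helmet. The class name `FixedWindowLimiter`, the helper `publicErrorMessage`, and the limit of five attempts per fifteen minutes are all hypothetical. The limiter counts attempts per key (for example, a client IP) within a fixed time window and refuses any beyond the limit. The helper shows the "don't leak details in production" rule: full error text in development, a generic message otherwise.

```typescript
// Hypothetical sketch: an in-memory fixed-window rate limiter.
// Each key (e.g. a client IP or email) may make `limit` attempts per window.
class FixedWindowLimiter {
  private readonly limit: number;
  private readonly windowMs: number;
  private hits = new Map<string, { count: number; windowStart: number }>();

  constructor(limit: number, windowMs: number) {
    this.limit = limit;
    this.windowMs = windowMs;
  }

  // Returns true if the attempt is allowed, false if the key is over its limit.
  // `now` is injectable so the behavior can be checked without waiting.
  attempt(key: string, now: number = Date.now()): boolean {
    const entry = this.hits.get(key);
    if (!entry || now - entry.windowStart >= this.windowMs) {
      // No record yet, or the previous window has expired: start a new window.
      this.hits.set(key, { count: 1, windowStart: now });
      return true;
    }
    if (entry.count >= this.limit) {
      return false; // a web server would answer with HTTP 429 Too Many Requests
    }
    entry.count += 1;
    return true;
  }
}

// Hypothetical helper: expose error details only outside production.
function publicErrorMessage(err: Error, nodeEnv: string | undefined): string {
  return nodeEnv === "production" ? "Something went wrong." : err.message;
}

// Usage: five login attempts per 15 minutes per key.
const loginLimiter = new FixedWindowLimiter(5, 15 * 60 * 1000);
const results = Array.from({ length: 6 }, () => loginLimiter.attempt("203.0.113.7", 0));
// The first five attempts are allowed; the sixth is refused.
```

A fixed window is the simplest scheme to reason about. Its main weakness is that a client can burst at the boundary between two windows, which is one reason production libraries offer more refined strategies. Note also that an in-memory map resets whenever the server restarts.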

The final phase of development was initiated by my request for mobile UI optimizations, but it ultimately became a more thoroughgoing effort to establish consistent patterns throughout the application and across devices. Mobile enhancements included responsive font sizing, grids that stack on mobile, flexible buttons, touch-friendly targets, sticky navigation, and reliable text wrapping. But beyond mobile responsiveness, we implemented visual polish across the entire application: skeleton loaders replaced generic "Loading..." text; a modern card-based UI with rounded corners and subtle shadows replaced the original flat divider-line design; hover effects provided clear interaction feedback; and smooth transitions made state changes more elegant. The result is an application that works well on a range of devices and, if I say so myself, looks pretty slick.

Things Fall Apart

I was feeling pretty good when AcadiMeet was released to the public. Unfortunately, the application was about to face, and fail, a real test of cloud computing.

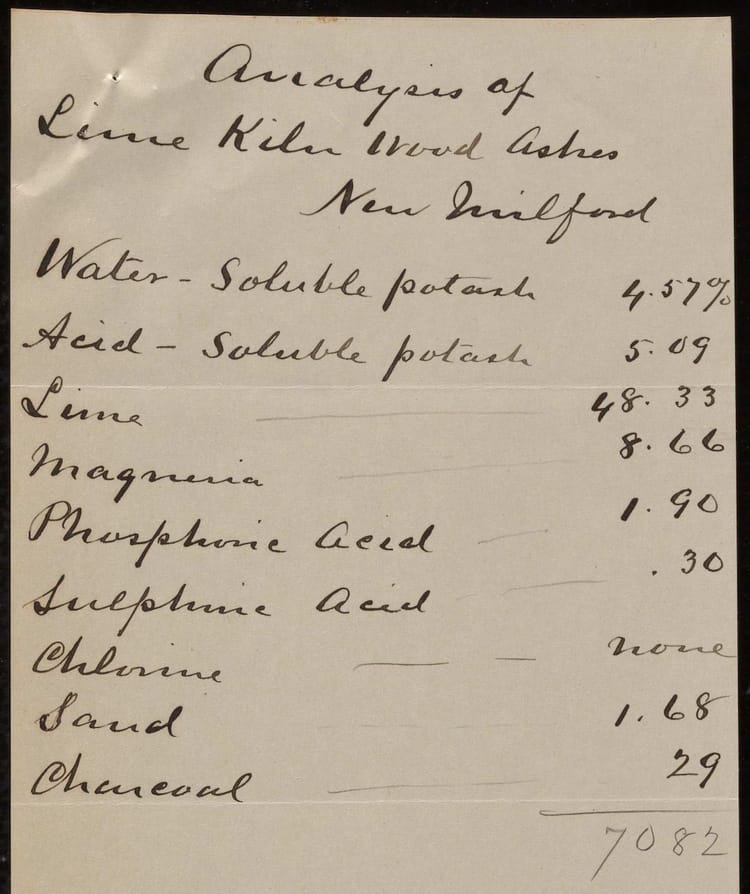

During development I made a pragmatic choice to use SQLite for a database backend. With no separate database server to configure, it seemed perfect for getting up and running quickly. The problem came after live deployment, when I pushed a minor text update to the production application. When I tried to log in to check the change, I found that my user account had vanished. The cause, revealed in the hosting logs, was a fundamental architectural mismatch: AcadiMeet's cloud platform (Railway) uses ephemeral filesystems. Any files written to the server's disk—including the SQLite database file—disappear with every new deployment. This means that all user data would be wiped out anytime I made even the smallest change to the application. And the application was already in use.

When I found the error, I spent a frantic hour extracting user emails from logs and notifying users of the bug (fortunately, I caught it early enough that only about a dozen people had signed up, and none of them had created any real polls yet). Then I turned back to Claude to implement a permanent fix, which involved a by-no-means-trivial migration from SQLite to PostgreSQL, a separate service with persistent storage, decoupled from the application code. Within a few hours, AcadiMeet was up and running again.
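One way to guard against a repeat of this mistake is a startup check that refuses to boot in production when the database connection string points at a local file. The sketch below assumes, as in a typical Prisma setup, that the connection string lives in a `DATABASE_URL` environment variable. SQLite URLs in Prisma look like `file:./dev.db`, while PostgreSQL URLs begin with `postgresql://` or `postgres://`. The function names and error wording are hypothetical. The check only inspects the URL scheme. It cannot tell whether a given disk is actually persistent.

```typescript
// Hypothetical startup guard: fail fast if production would store data
// on the app server's own (possibly ephemeral) disk instead of a database service.
type DbKind = "sqlite-file" | "postgresql" | "unknown";

function classifyDatabaseUrl(url: string): DbKind {
  if (url.startsWith("file:")) return "sqlite-file";
  if (url.startsWith("postgresql://") || url.startsWith("postgres://")) return "postgresql";
  return "unknown";
}

function assertPersistentDatabase(env: Record<string, string | undefined>): void {
  const url = env["DATABASE_URL"];
  if (!url) {
    throw new Error("DATABASE_URL is not set");
  }
  const kind = classifyDatabaseUrl(url);
  if (env["NODE_ENV"] === "production" && kind !== "postgresql") {
    // A file-based SQLite database would be wiped on every redeploy
    // on a platform with an ephemeral filesystem.
    throw new Error(`Refusing to start: production DATABASE_URL is ${kind}, expected PostgreSQL`);
  }
}

// Usage: call once at startup, before the server accepts requests.
// In a real Node app you would pass process.env here.
assertPersistentDatabase({ NODE_ENV: "development", DATABASE_URL: "file:./dev.db" }); // allowed locally
```

Failing loudly at startup turns a silent data-loss bug into a deployment that never goes live, which is a far cheaper failure to discover.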

This experience taught me a profound lesson. Your AI partner can help you build quickly, but you still need a good understanding of your application's architecture and how all the different pieces fit together. Now I make sure to ask for additional explanation of any major architectural decision the AI recommends. In my latest project (which I'll write about when this series is finished), I've even taken to asking Claude to quiz me on why we made certain decisions and how different architectural elements and deployment technologies interact before we implement any major changes.

Conclusion: Competent Imposter Rising

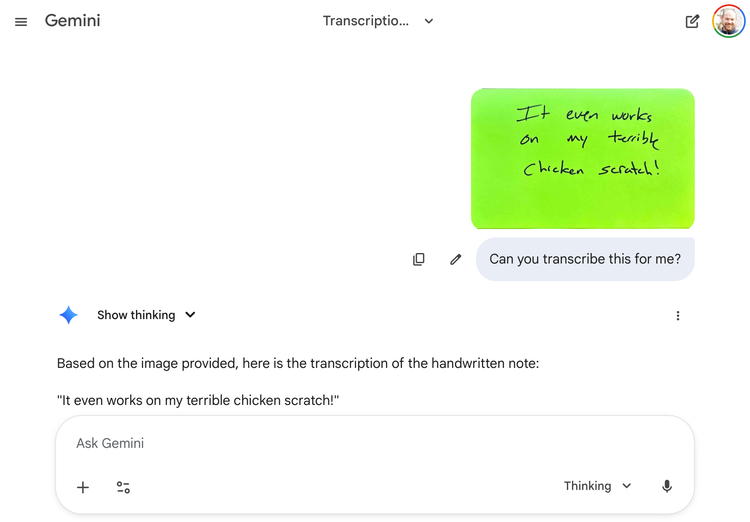

The AcadiMeet development process, spanning five chat sessions over the course of a week, taught me a lot about the possibilities and limitations of working with AI, at least in the current moment and at least for web development projects. The AI provides implementation speed, syntax consistency, pattern recognition, and debugging assistance. But a competent human imposter like me is still necessary for the vision, user empathy, architectural decisions, and quality standards.

Building AcadiMeet also taught me about my own learning style and how I work best. I learned that I think more clearly when I have to explain things to someone else—even if that "someone" is a robot. I learned that I'm more productive when I'm not paralyzed by questions of syntax and can focus on the bigger picture. I learned that rapid iteration suits me (and my likely case of undiagnosed ADHD) better than careful upfront planning.

To my delight, I also learned that I still love building things, even though I'd convinced myself that chapter of my career was over. The barrier was never lack of interest, nor even really lack of time. It was the fear of picking back up after years away from active development. AI removed that fear.

Now I have a working application serving real users. More importantly, I have a new way of working that I can apply to future projects. And I have proof that the gap between having an idea and having a real digital humanities project has shrunk dramatically in the age of AI. The old binary of hack vs. yack is collapsing, and I'm eager to see what the new unity means for digital humanities.
